GitHub Copilot, in general availability since June 2022, gave the software development community its first real taste of generative AI for coding tasks.

Two years on, the market is full to the brim with options. Microsoft launched Microsoft Copilot, Amazon released Q Developer, and Google came slightly late to the party with Gemini Code Assist. Beyond Big Tech, startups are also releasing gen AI coding tools based on fine-tuned versions of open-source large language models (LLMs).

The generative AI coding market is booming and is set to grow from $18.4 million to $95.5 million by 2030, despite mixed user sentiment. Some software developers have become disillusioned with the depth and accuracy of Gen AI coding tools. Other teams continue to integrate the technology into different stages of their software development lifecycle, citing improvements in code quality and developer productivity.

So how useful are generative AI tools for software engineering? Do the hyped benefits pan out in complex projects? In this post, we discuss the potential and limitations of AI-assisted software engineering.

AI Coding Assistants: 6 Feasible Use Cases

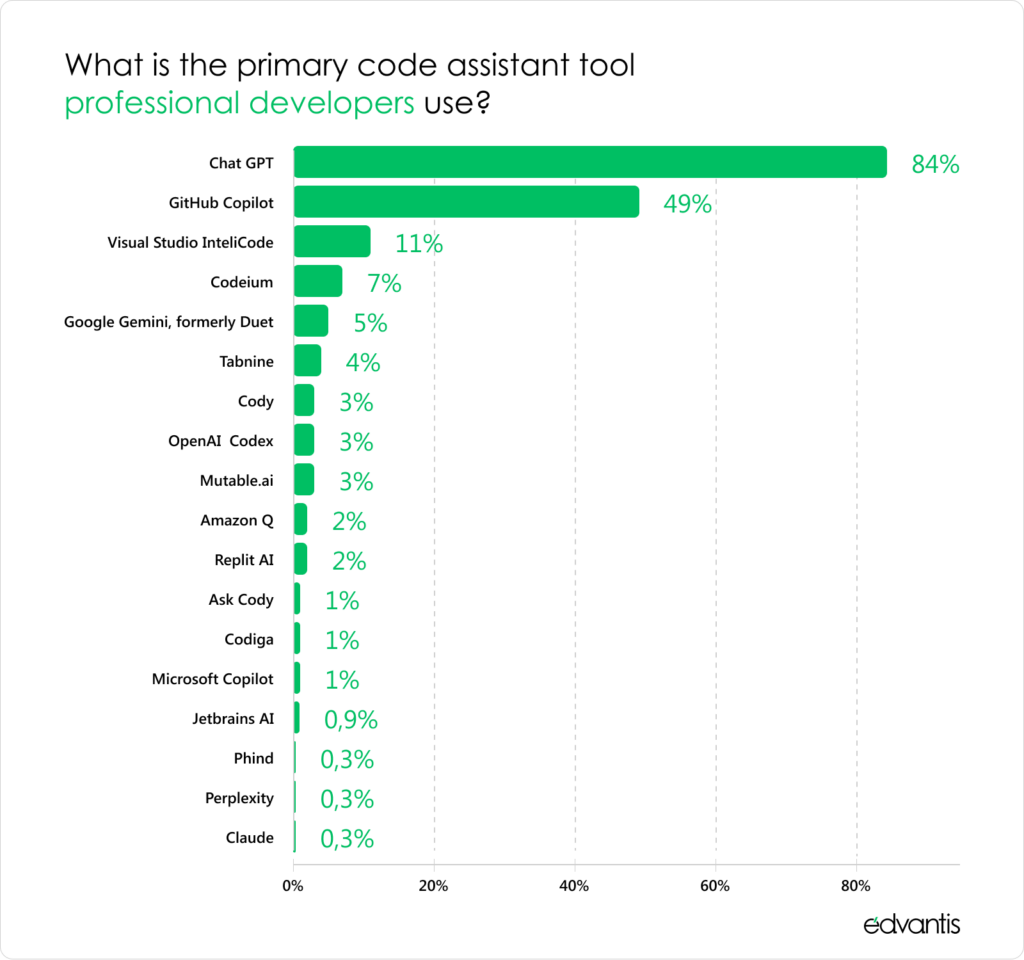

Three-quarters of software developers already use or plan to use AI coding assistants, according to a Stack Overflow community survey. ChatGPT and GitHub Copilot lead by a landslide.

Source: Stack Overflow

Enterprises, too, are on board with the Gen AI trend. Gartner says two-thirds of them were either piloting or deploying AI coding tools as of April 2024. The firm also predicts that 75% of enterprise software engineers will use AI coding assistants by 2028, a big jump from less than 10% in early 2023.

Why the rush? Studies by Microsoft, GitHub, and MIT all point towards substantial productivity gains in software engineering with AI assistants, mostly by automating tedious, low-value work. They can also streamline repetitive actions across the entire software development lifecycle (SDLC), from requirements analysis to coding, testing, deployment, and documentation generation. In addition, some Gen AI tools also help in adjacent areas like IT operations, cybersecurity, and data management.

But not everything about AI coding assistants is hunky-dory. There are many advantages, but also some clear limits to how well they perform for popular use cases.

Code Generation and Reviews

Gen AI assistants shine when it comes to repetitive, standardized coding. They’re great at catching formatting inconsistencies, wonky lines of code, and minor bugs. It’s the difference between typing a document with and without a spellchecker.

Jonathan Burket, a Senior Engineering Manager at Duolingo, one of the early GitHub Copilot adopters, shared that the tool is “very, very effective for boilerplate code” generation. It tab-completes basic classes and functions across programming languages.

According to Burket, software developers working with a new repository or framework got a 25% speed boost, and those familiar with the codebase completed tasks about 10% faster. GitHub Copilot also helped Duolingo reduce mean code review times by 67% thanks to contextual suggestions.

But there are also some limitations to keep in mind. As Birgitta Böckeler observed, AI coding tools sometimes provide useful in-line suggestions, but in other cases the tips can be misleading.

Suggestion accuracy varies considerably across tech stacks and programming languages. Most LLMs were trained on a large public corpus, so they have seen far more sample code in popular languages like Java or Python than in niche ones like Lisp or Haskell.

Novice software developers may also accept suggestions at face value without verifying them, especially larger code suggestions, which increases the risk of bugs and unnecessary code redundancy.

One of the trade-offs to the usefulness of coding assistants is their unreliability. The underlying models are quite generic and based on a huge amount of training data, relevant and irrelevant to the task at hand. That unreliability creates two main risks: It can affect the quality of my code negatively, and it can waste my time.

Birgitta Böckeler, AI-First Software Delivery Lead at ThoughtWorks

Code Refactoring

Code refactoring is another painstaking software engineering task where Gen AI assistants can add value. They can provide suggestions based on the company’s coding standards and available documentation to promote uniformity. Automatically generated documentation and comments also make the code easier to maintain. Together, this limits the technical debt that builds up when different sections of the codebase develop their own idiosyncrasies and structural issues.

McKinsey’s study found that Gen AI coding tools help complete refactoring tasks 20-30% faster, a gain that many teams working on legacy system modernization would welcome.

AI coding assistant Metabob goes a step further and helps identify more complex problems in system architectures, including concurrency issues, memory-inefficient processes, and more than 150 other problem categories. It combines graph-attention networks with generative AI, trained on millions of bug fixes supplied by experienced software engineers.

Source: Metabob

Another great use case of AI coding assistants is code conversion between languages, often required as part of re-platforming an application or when building integrations with newer systems.

But there’s always a but. GitClear’s “Coding on Copilot” whitepaper found that AI assistants may encourage “bad behavior” such as mindlessly copy-pasting suggestions and introducing unnecessary redundancies. “Instead of refactoring and working to DRY (‘Don’t Repeat Yourself’) code, these Assistants offer a one-keystroke temptation to repeat existing code,” the paper concluded.
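To make the DRY point concrete, here is a minimal, hypothetical Python sketch. The first two functions show the near-identical code that a one-keystroke completion makes easy to keep cloning; the last one is the refactored version, with the varying part pulled into a single table. The function names and the `VAT_RATES` table are illustrative assumptions, not taken from the GitClear paper.

```python
from decimal import Decimal, ROUND_HALF_UP

# Before: near-duplicate functions that differ only in one constant.
def invoice_total_de(net: Decimal) -> Decimal:
    gross = net * Decimal("1.19")
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def invoice_total_fr(net: Decimal) -> Decimal:
    gross = net * Decimal("1.20")
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

# After (DRY): one parameterized function; the rates live in one place.
VAT_RATES = {"DE": Decimal("0.19"), "FR": Decimal("0.20")}  # illustrative rates

def invoice_total(net: Decimal, country: str) -> Decimal:
    """Gross invoice total, rounded half-up to cents."""
    gross = net * (1 + VAT_RATES[country])
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

print(invoice_total(Decimal("49.99"), "FR"))  # 59.99
```

Adding a new country in the DRY version means adding one table entry rather than one more copied function, which is exactly the kind of change a repeat-the-last-block suggestion skips.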

The CodeScene team also found that popular LLMs weren’t that great at refactoring. According to Adam Tornhill, the company’s founder and CTO, AI failed to improve the code 30% of the time. Worse, its suggestions broke unit tests about two-thirds of the time. In effect, the AI assistants were changing the external behavior of the code in subtle but critical ways instead of refactoring it. Again, this creates problems for junior developers, who may not yet have the skills to catch such changes.
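A refactoring should leave external behavior unchanged, which is why broken unit tests are such a telling signal. A minimal sketch of the idea is to compare the old and "refactored" versions of a function on a handful of boundary inputs before accepting the change. The function names, the 50.00 free-shipping threshold, and the inputs are hypothetical illustrations, not taken from CodeScene’s study.

```python
def shipping_fee(total: float) -> float:
    """Original behavior: orders of 50.00 or more ship free."""
    if total >= 50:
        return 0.0
    return 4.99

def shipping_fee_refactored(total: float) -> float:
    """A plausible-looking 'cleanup' that silently flips >= to >."""
    return 0.0 if total > 50 else 4.99

def disagreements(old, new, inputs: list) -> list:
    """Return the inputs on which the two versions give different results."""
    return [x for x in inputs if old(x) != new(x)]

# Boundary values are where subtle behavior changes tend to hide.
BOUNDARY_CASES = [0, 49.99, 50, 50.01, 120]
print(disagreements(shipping_fee, shipping_fee_refactored, BOUNDARY_CASES))  # [50]
```

The suggested change looks equivalent at a glance, but the check shows it charges shipping on an order of exactly 50.00, the kind of subtle shift a less experienced reviewer could miss.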

Test Case Generation

Nearly half of QA teams spend at least nine hours writing a single test case for a complex scenario (think complex product logic, multiple integrations, etc.). Gen AI can bring extra automation into QA processes to enable faster and more thorough software testing.

For example, Katalon offers a GPT-powered co-authoring platform for test creation. The platform also helps analyze TestOps data to improve coverage rates and test quality. OpenText’s UFT One embeds machine learning and advanced optical character recognition (OCR) to reduce functional test creation time and maintenance effort.

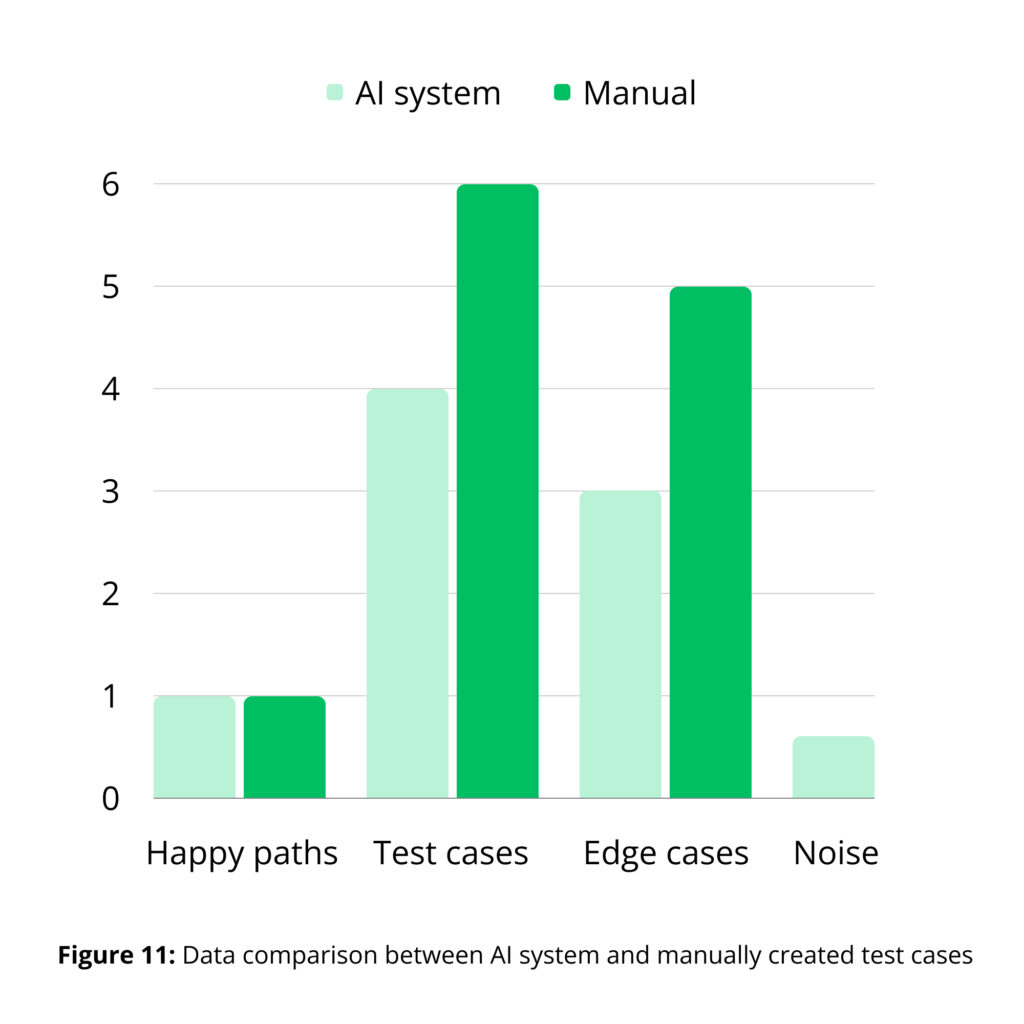

Fine-tuned open-source LLMs also performed well in several test generation scenarios, according to a study by Umeå University.

Source: Umeå University

AI systems substantially reduced the time for test case generation. However, only 66.67% of the AI-generated test cases were practically useful (though they may still have contained minor noise).

Generally, AI can create accurate unit tests for a well-maintained codebase. But it is of little use in test-driven development (TDD), where the team writes the unit tests first and then writes code that passes them.
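The TDD ordering is easiest to see in code. In the minimal sketch below, the tests are written first and encode the intended behavior before any implementation exists, so there is no code yet from which a tool could derive them. The `slugify` function and its expected outputs are hypothetical examples chosen for illustration.

```python
import re

# Step 1 ("red"): write the tests first. At this point slugify() does not
# exist yet, so running them would fail with a NameError.
def test_lowercases_and_hyphenates():
    assert slugify("Hello World") == "hello-world"

def test_strips_punctuation():
    assert slugify("AI, for coding!") == "ai-for-coding"

# Step 2 ("green"): write the simplest code that makes the tests pass.
def slugify(text: str) -> str:
    """Lowercase the text and join its alphanumeric runs with hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)

# Step 3: run the tests (a runner such as pytest would discover them by name).
for test in (test_lowercases_and_hyphenates, test_strips_punctuation):
    test()
print("all tests pass")
```

In this workflow the tests act as the specification, so their value comes from the team’s understanding of the requirements rather than from the code that follows.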

Data Management Tasks

The flip side of big data analytics is the growing volume of data management tasks on technical teams’ plates. Data science teams can spend a good chunk of their productive time on data cleansing and organization tasks like metadata management, data lineage tracking, dataset pre-cleansing, and image annotation, among others.

Gen AI slots nicely into those workflows and allows teams to automate:

- Metadata label generation, using the corporate taxonomy for naming conventions

- Lineage information annotation to maintain auditable data trails across the organization

- Data cleansing tasks like automated “noise” removal of corrupt, incomplete, or poor-quality assets

- Data quality management tasks like record deduplication or standardization of data formats, types, and values
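The standardization and deduplication tasks in the last bullet are concrete enough to sketch. The snippet below is a plain, rule-based Python version (not a Gen AI tool) of the kind of logic such tools aim to draft or automate. The records, field names, and accepted date formats are hypothetical assumptions.

```python
from datetime import datetime

# Hypothetical raw records with inconsistent casing, whitespace, and date formats.
raw = [
    {"email": " Ana@Example.com ", "signup": "2024-03-01"},
    {"email": "ana@example.com", "signup": "01/03/2024"},
    {"email": "li@example.org", "signup": "2024-02-15"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed formats present in the source data

def parse_date(value: str) -> str:
    """Normalize a date string to ISO 8601, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")

def standardize(record: dict) -> dict:
    """Standardize values: trim and lowercase emails, convert dates to ISO format."""
    return {
        "email": record["email"].strip().lower(),
        "signup": parse_date(record["signup"].strip()),
    }

def deduplicate(records: list[dict], key: str) -> list[dict]:
    """Keep the first record seen for each value of `key`."""
    seen, unique = set(), []
    for rec in records:
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique

clean = deduplicate([standardize(r) for r in raw], key="email")
print(clean)
```

Standardizing before deduplicating matters here: the two "Ana" records only become recognizable duplicates once their emails are trimmed and lowercased.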

Informatica recently presented a new gen AI tool for streamlining data management tasks on its cloud data management platform.

CLAIRE GPT helps business users discover the data they need using natural language commands instead of SQL queries. For infrastructure teams, benefits include automatic generation of a first-draft data pipeline that transforms data within the same source instance.

However, to get the most value from Gen AI tools for data management, prospective adopters must already have strong data management processes and supporting infrastructure. This includes scalable data platforms, proper data governance, and existing pipelines for data cleansing, transformation, and uploads. Without these foundations in place, Gen AI data management tools will be of little value.

Security Scans and Threat Investigation

Beyond engineering, Gen AI assistants also help cybersecurity teams keep a closer watch over the corporate perimeter. Integrated with popular security platforms, such tools help with alert prioritization, case investigation, and security policy management, among other tasks.

Nokia recently released an embedded GenAI assistant for its NetGuard Cybersecurity Dome for telecom security.

Trained on insights from 5G network architecture, 5G security practices, and Nokia’s telco domain expertise, the tool can provide accurate information on recommended network architecture configurations, optimal topology, and protection mechanisms against common attacks by adversaries. The GenAI assistant is expected to reduce the time it takes to identify and resolve a threat by up to 50%.

Cylance Assistant from BlackBerry, in turn, provides a conversational interface for the company’s predictive cybersecurity AI model, Cylance AI, enabling security analysts to query security intel using text-based commands.

The wrinkle, however, is that adopting Gen AI tools often creates new security concerns. A Snyk survey found that security professionals are three times more likely than C-suite members, and significantly more likely than software developers, to say that AI-generated code is “bad”. This is likely because security teams often have to deal with the “aftermath” of poor Gen AI code, which can introduce new vulnerabilities and system errors.

Whether you plan to adopt gen AI for coding or other types of tasks, you’ll have to stay vigilant about their security (and the security of the outputs they produce).

Getting the Value from AI Coding Assistants

When it comes to Gen AI coding assistance, leaders may need to temper their excitement in favor of a long-term strategy. First, clearly articulate the problem you’re trying to solve, and make it more precise than just “increasing software development productivity”.

Think about the specific issues you want to address. For example, “lack of capabilities for unit test case generation” or “delayed deployments due to long peer code review times”. Set specific KPIs to measure the impact of adoption. These can be proxy metrics like “percentage of automated unit test coverage” or “mean peer code review time”. Then evaluate whether Gen AI is indeed the best solution; it may or may not be.
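Both proxy metrics are simple to compute once the raw data is exported. A minimal sketch, assuming a hypothetical export of review-request and approval timestamps per pull request, plus covered and total line counts from a coverage tool (the data and function names are illustrative, not from any specific platform):

```python
from datetime import datetime
from statistics import mean

# Hypothetical export: (review requested, review approved) per pull request.
review_log = [
    ("2024-05-01T09:00", "2024-05-01T15:00"),
    ("2024-05-02T10:00", "2024-05-03T10:00"),
    ("2024-05-03T08:30", "2024-05-03T11:30"),
]

def review_hours(requested: str, approved: str) -> float:
    """Elapsed time between review request and approval, in hours."""
    delta = datetime.fromisoformat(approved) - datetime.fromisoformat(requested)
    return delta.total_seconds() / 3600

def mean_review_hours(log: list[tuple[str, str]]) -> float:
    """KPI: mean peer code review time across a set of pull requests."""
    return mean(review_hours(req, appr) for req, appr in log)

def coverage_pct(covered_lines: int, total_lines: int) -> float:
    """KPI: share of lines exercised by automated unit tests, as a percentage."""
    return 100 * covered_lines / total_lines

baseline_hours = mean_review_hours(review_log)
print(f"baseline mean review time: {baseline_hours:.1f} h")  # 11.0 h
print(f"unit test coverage: {coverage_pct(820, 1000):.1f}%")  # 82.0%
```

Recording a baseline like this before the pilot, then recomputing the same figures afterwards, gives a like-for-like comparison instead of an impression of "feeling faster".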

Lastly, when evaluating the ROI of adopting Gen AI coding tools, factor in the extra training your teams may need to get the most out of the new system. Senior staffers may be better at identifying the optimal use cases, whereas junior staff may initially feel empowered to complete more work but later become disillusioned by peer-review feedback on their code quality. Our best advice: start with several narrowly scoped pilots, analyze the results, and then work out the optimal strategy for a progressive technology roll-out.