AI technologies are now easily accessible. Robust LLMs and many machine learning models are either open-source or available via APIs from cloud service providers. Generative AI agents are included in many major enterprise platforms.

Commoditized access to AI has smoothed the adoption curve and fueled enthusiasm. Between 2025 and 2028, 92% of businesses plan to increase their AI investments, and that’s after a substantial cash injection during the past two years.

AI adoption and technology understanding, however, are often at odds. The majority of executives agree that AI is a strategic opportunity for their organization, but only one in four have implemented AI in some way into their offerings and processes. Likewise, only one in twenty has made AI a core part of their offerings or processes.

Slow progress can be explained by low ROI. Among AI adopters surveyed by Gartner, half struggle to quantify the value of AI investments. While AI can (and undoubtedly will) create massive positive impacts for businesses, the success and ROI of these initiatives will heavily depend on leaders’ ability to select and quickly validate the right AI use cases.

6-Step Framework for AI Use Case Selection and Validation

Companies that succeed with AI adoption (and see justified ROI) don’t start with the technology; they start with the problem.

“Adopting AI for the sake of becoming an ‘AI-enabled’ business rarely works,” says Roman Dovhan, Chief Strategy Officer at Edvantis. “On the contrary, many businesses get the opposite effect: When new technology gets ‘forced upon’ an unfit process (i.e., such that could be streamlined either through simpler automation), it adds extra overheads for the teams.” A recent survey found that 77% of employees say AI adoption has increased their workload, and nearly half (47%) don’t know how to realize productivity gains.

In many cases, businesses try to ‘retrofit’ subpar business processes with AI, which only adds extra overheads and reduces efficiency. Or they approach adoption without a clearly defined business case and set of expected outcomes, leading to poor ROI.

To avoid such scenarios, Edvantis’ AI engineering team suggests using the following six-step framework for selecting and validating AI use cases.

1. Frame a Business Problem

AI, or more precisely, machine learning, deep learning, and generative models, is an ultra-versatile technology. You have different algorithms, like:

- Predictive analytics for property valuations, hospital readmission predictions, or even employee turnover forecasting.

- Natural language processing (NLP) for sentiment analysis, document summarization, and machine translations.

- Computer vision for quality inspections, industrial robotics, and medical imaging processing.

- Optical character recognition for invoice and receipt digitization, document automation, and ID verification systems.

- Recommendation systems for e-commerce product upsells, streaming service content suggestions, and marketing personalization.

- Speech and audio recognition for voice-activated assistants, call center transcription, or emotion detection from voice.

- Generative AI for content generation, document management, and all sorts of ‘copilot’ assistants for coding or customer engagement.

All of these algorithms solve vastly different business problems.

Identify a business or user problem first (e.g., high process execution costs or a lack of data for decision-making). Then, consider whether an AI model can solve it effectively or if a simpler alternative (e.g., a BI dashboard or a robotic process automation tool) would do.

To frame your problem, engage stakeholders from various teams and talk to the people closest to daily operations. By surfacing grassroots ideas, you can concentrate on your team’s most pressing problems and deliver a solution they actually need, which in turn eases adoption.

Airbus leadership didn’t start by asking how to become AI-enabled. Instead, they started looking into various production issues and then considered various solutions. The company realized that routine production issues were the biggest source of inefficiency. So they trained an AI system that recommends troubleshooting for 70% of production disruptions, leaving just 30% for humans to deal with.

“Strictly speaking, we don’t invest in AI. We don’t invest in natural language processing. We don’t invest in image analytics. We’re always investing in a business problem.”

Matt Evans, former VP of Digital Transformation at Airbus

2. Determine Feasibility

You’ve framed the business problem(s). Now, you need to right-size the solution. Talk to your technical team or external AI experts to determine the feasibility of deploying different algorithms or alternative technology options.

The following factors affect the feasibility of AI adoption:

- Data availability. All ML/DL models need substantial training datasets, plus scalable data pipelines for data ingestion, transformation, and subsequent access. If your data is siloed across different enterprise systems or you rely on legacy ETL processes, you may need to upgrade your data architecture first.

- Algorithm suitability. Has anyone solved your problem before? Simple linear models are easier to deploy than multi-layer neural networks. Are there any open-source models you can adapt and retrain to save on development costs? Fine-tuning AI algorithms for specific use cases is significantly faster and cheaper than producing novel ones.

- Compliance. Are there any limits on data analytics in your industry? In the healthcare and finance industries, customer data needs to be anonymized and encrypted before being sent for analysis. An alternative option is training the algorithm on synthetic data (mock, computer-generated records) and then validating it on anonymized customer data. Similarly, consider applicable model explainability requirements when choosing an algorithm.
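As a rough illustration of the compliance point above, here is a minimal Python sketch of scrubbing a record before it leaves a regulated system. It uses keyed hashing (HMAC-SHA256) from the standard library, which is pseudonymization rather than full anonymization, so treat it as one possible building block and not a compliance recipe. The field names, the sample record, and `SECRET_KEY` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real one would live in a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, id_fields: set, drop_fields: set) -> dict:
    """Return a copy with identifier fields hashed and listed fields removed."""
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue  # drop direct identifiers such as names entirely
        cleaned[field] = pseudonymize(str(value)) if field in id_fields else value
    return cleaned


# Hypothetical sample record
record = {"patient_id": "P-1042", "name": "Jane Doe", "age": 57, "readmitted": True}
safe = scrub_record(record, id_fields={"patient_id"}, drop_fields={"name"})
print(safe)
```

The same input always maps to the same digest, so records can still be joined across datasets without exposing the raw identifier.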

Generally, you’ve got a feasible AI use case when the data is accessible and sufficient in volume, and the problem is well-defined and can be solved by customizing or fine-tuning existing models. Lastly, weigh the technical complexity against the business impact to ensure the solution is not only possible but also warrants the investment.

3. Prioritize Use Cases for Validation

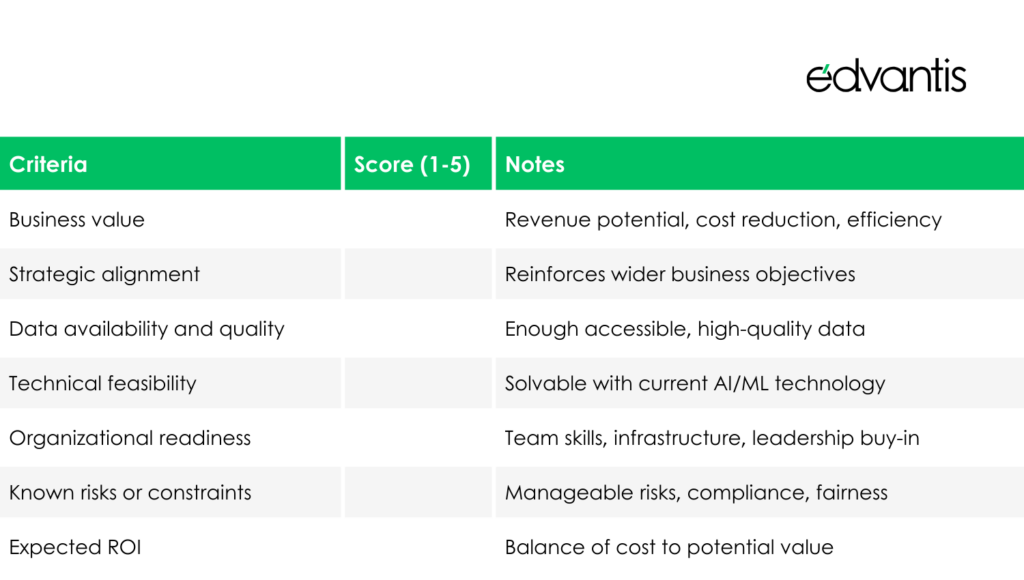

Feasibility studies will reduce the initial list of AI use cases. But you’d still have a long laundry list of ideas. Apply product management principles to prioritize your backlog by grading each use case on characteristics like ‘business value’, ‘technical feasibility’, ‘organizational readiness’, and ‘expected outcomes’.

Sample AI use case prioritization matrix

A good idea is to start with “high-value, low-effort” use cases. This way, you can run a quick validation cycle, documenting the costs, complexities, constraints, and outcomes. During the project retrospective, you can then formalize the best practices, and replicate and improve upon them during the next development cycle.
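One way to make this grading concrete is a weighted score per use case, divided by estimated effort. The Python sketch below is an assumption-laden illustration: the four criteria come from the list above, but the weights, the 1 to 5 grading scale, the `effort` field, and the sample backlog are all hypothetical and should be replaced with your own.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0); tune them to your own priorities.
WEIGHTS = {
    "business_value": 0.4,
    "technical_feasibility": 0.3,
    "organizational_readiness": 0.2,
    "expected_outcomes": 0.1,
}


@dataclass
class UseCase:
    name: str
    scores: dict  # criterion -> grade from 1 (poor) to 5 (excellent)
    effort: int   # 1 (low effort) to 5 (high effort)


def weighted_score(use_case: UseCase) -> float:
    """Combine the per-criterion grades into a single value score."""
    return sum(WEIGHTS[c] * use_case.scores[c] for c in WEIGHTS)


def prioritize(use_cases: list) -> list:
    """Rank by value per unit of effort, so high-value, low-effort items come first."""
    return sorted(use_cases, key=lambda uc: weighted_score(uc) / uc.effort, reverse=True)


# Hypothetical backlog for illustration only
backlog = [
    UseCase("Support ticket triage",
            {"business_value": 5, "technical_feasibility": 4,
             "organizational_readiness": 4, "expected_outcomes": 4}, effort=2),
    UseCase("Demand forecasting",
            {"business_value": 4, "technical_feasibility": 3,
             "organizational_readiness": 3, "expected_outcomes": 4}, effort=4),
    UseCase("Invoice OCR",
            {"business_value": 3, "technical_feasibility": 5,
             "organizational_readiness": 4, "expected_outcomes": 3}, effort=1),
]

for uc in prioritize(backlog):
    print(f"{uc.name}: value={weighted_score(uc):.2f}, effort={uc.effort}")
```

Dividing by effort is only one possible ranking rule. A two-by-two value/effort grid works just as well if your team prefers a visual matrix.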

By moving incrementally — from simpler to more complex AI use cases — you can progressively improve your maturity in areas like infrastructure, cloud resource management, and MLOps processes while also delivering ‘quick wins’ for the business.

For instance, Vodafone struggled a lot with its customer service quality. The volume of incoming tickets was too large, leading to overwhelmed teams and high staff attrition. So the telecom prioritized AI adoption in the customer support function and developed a generative AI support agent. TOBi now deals with 1 million customer requests per month, with 70% being resolved at first contact. The remaining 30% of cases get passed along to a human agent with an auto-generated summary.

By addressing the biggest bottleneck first — high ticket volume — with an already proven AI solution (a fine-tuned LLM), Vodafone quickly demonstrated the value of AI for end-users and business stakeholders and likely secured support for more complex initiatives.

4. Set Success Criteria

Before you start AI model development, determine how you’ll measure the success of a pilot. In many cases, businesses struggle to determine the impacts and ROI of AI because they’re measuring the wrong metrics.

To prove the value of your investment, you should measure technical algorithm metrics and indirect business impacts.

Technical metrics like model ‘availability’ (percentage of uptime), ‘recall’ (percentage of actual positive cases identified), or ‘throughput’ (number of tasks processed per minute) help evaluate model performance in production.
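For reference, the three metrics above reduce to simple ratios. The following Python sketch shows one way to compute them. The function names and sample numbers are hypothetical, and labels are assumed to be binary (1 for a positive case, 0 otherwise).

```python
def availability(uptime_seconds: float, total_seconds: float) -> float:
    """Percentage of the observation window during which the model was reachable."""
    return 100.0 * uptime_seconds / total_seconds


def recall(y_true: list, y_pred: list) -> float:
    """Share of actual positive cases that the model identified."""
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    positives = true_pos + false_neg
    return true_pos / positives if positives else 0.0


def throughput(tasks_completed: int, elapsed_seconds: float) -> float:
    """Number of tasks processed per minute."""
    return tasks_completed / (elapsed_seconds / 60)


# Hypothetical sample data
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]

print(f"availability: {availability(86_000, 86_400):.2f}%")
print(f"recall: {recall(y_true, y_pred):.2f}")
print(f"throughput: {throughput(1200, 600):.1f} tasks/min")
```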

Additionally, measure security and compliance metrics like ‘model robustness’ (resistance to adversarial attacks) and ‘explainability levels’ (interpretability of model decisions) to stay on the safe side of regulations. For cybersecurity, run periodic reviews of model access control logs, data lineage, and model versioning to detect unauthorized changes.

Business metrics worth tracking will largely depend on the AI use case. Ideally, you should find a good ‘proxy’ measure that indicates whether the model solves the initial problem. For example, you can track how many previously manual processes have been fully or partially automated with AI and resulted in lower error rates or production defects.

Or you can try to approximate operational savings. Schneider Electric measured the impacts of its AI-enabled smart factory by tracking energy costs at individual plants (achieving a reduction of 10% to 30%) and maintenance costs (improved by 30% to 50%), plus improvements in goods’ quality.

Another good metric is new revenue enablement. You can evaluate how AI models impact sales volumes or deal speed. Edvantis helped KPS Labs deploy a system that predicts likely-to-list (likely-to-sell) off-market properties and value ranges for listed homes, which helps real estate agents make smarter pricing decisions and close sales faster.

Workforce productivity gain is another good metric for measuring the ROI of AI. As part of an internal proof of concept (PoC) deployment of its Copilot, Microsoft measured productivity gains among participants. Copilot users completed tasks 26% to 73% faster with a generative AI assistant, with 70% of participants agreeing that Copilot increased their productivity. Positive results from beta users persuaded Microsoft to take the product public.

5. Validate Deployed AI Models

Successful AI adopters start with a limited PoC deployment for prioritized use case(s). In simpler terms, they test an early model version with a limited group of users to validate the initial hypothesis, gather feedback, and fine-tune the model.

Typically, most AI models undergo several rounds of validation:

- Cross-validation — Split data into training and testing subsets to evaluate model reliability and minimize overfitting risks.

- Functional testing — Does the model perform as it should on production data? Compare technical metrics like accuracy, recall, and throughput against public AI model performance benchmarks and internal requirements.

- Performance testing — Measure how the system performs under different workloads (e.g., 10+ concurrent users) and conditions (e.g., varying data quality) in terms of response time and accuracy.

- Usability testing — Do beta users understand how to work with the new tool? Do they need extra training? Do they enjoy the interfaces and overall UX?
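To illustrate the cross-validation step from the list above, here is a minimal k-fold sketch in plain Python. In k-fold cross-validation, the data is split into k folds and each fold serves once as the test set while the rest is used for training. The majority-class "model" is a deliberately trivial stand-in, and the function names and toy dataset are hypothetical. In practice you would likely use a library such as scikit-learn.

```python
import random
from collections import Counter
from statistics import mean


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> list:
    """Shuffle sample indices and deal them into k roughly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(features, labels, fit, predict, k: int = 5, seed: int = 0) -> list:
    """Train on k-1 folds, score accuracy on the held-out fold, repeat for each fold."""
    scores = []
    for held_out in k_fold_indices(len(labels), k, seed):
        held = set(held_out)
        train_idx = [i for i in range(len(labels)) if i not in held]
        model = fit([features[i] for i in train_idx], [labels[i] for i in train_idx])
        correct = sum(predict(model, features[i]) == labels[i] for i in held_out)
        scores.append(correct / len(held_out))
    return scores


# Stand-in model: always predicts the most common label seen during training.
def fit_majority(train_features, train_labels):
    return Counter(train_labels).most_common(1)[0][0]


def predict_majority(model, feature_row):
    return model


# Hypothetical toy dataset: 20 samples, 14 of them positive
features = [[x] for x in range(20)]
labels = [1 if x >= 6 else 0 for x in range(20)]

scores = cross_validate(features, labels, fit_majority, predict_majority, k=5)
print("per-fold accuracy:", scores)
print("mean accuracy:", mean(scores))
```

A large spread between per-fold scores is usually a hint that the model is sensitive to which data it sees, which is worth investigating before a PoC release.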

Remember: AI model development is highly iterative. It’s normal to have a couple of ‘false starts’, even after you’ve done all the previous steps. Only 20% of all developed AI models end up getting deployed to production. Document your experiments, learn from mistakes, and continue iterating.

6. Scale Top-Performing Models

The next step after a PoC is full-scale deployment — aka a public or an internal release to a wider user segment. But that’s when most ‘hiccups’ happen.

Three-quarters of executives admit they struggle to move AI initiatives beyond the PoC stage, mostly because they lack the right processes. Some struggle with aligning AI teams with IT, business, and legal units to ensure seamless deployment. Others lack an established change management process or start experiencing infrastructure-related issues.

At Edvantis, we recommend a three-step path to successful AI model deployment in production:

- Infrastructure. Conduct a readiness audit across your cloud and data management infrastructure. Implement an MLOps toolkit for model monitoring and maintenance.

- People. You’ll need extra roles beyond data scientists to scale and support an AI system — ML/DL engineers, data engineers, AI product managers, and compliance experts.

- Governance. Establish and enforce data governance to ensure data quality, availability, privacy, and compliance. Configure model security and performance alerts to detect model drift and biased outputs early on.
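As one example of a drift alert, the Python sketch below compares a feature's production distribution with its training baseline using the Population Stability Index (PSI). PSI is a technique chosen here for illustration, not something prescribed by the framework above. The 0.1 and 0.25 thresholds are a commonly cited rule of thumb, and the function names and sample data are hypothetical.

```python
import math


def psi(expected: list, actual: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids taking log(0) for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    exp_p, act_p = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_p, act_p))


def drift_status(psi_value: float) -> str:
    """Map a PSI value to an alert level using rule-of-thumb thresholds (an assumption)."""
    if psi_value < 0.1:
        return "stable"
    if psi_value < 0.25:
        return "monitor"
    return "alert"


# Hypothetical data: the training baseline vs. a shifted production sample
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(drift_status(psi(baseline, baseline)))
print(drift_status(psi(baseline, shifted)))
```

A check like this can run on a schedule per input feature, with the "alert" level wired into whichever monitoring tool your MLOps toolkit provides.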

Doing the necessary ‘grunt work’ in these three areas helps you create a predictable path for productizing new AI models in less time and with lower friction.

Conclusion

There is no doubt that AI will create (and already has created) massive positive impacts for businesses. The question is: how soon will your company harness them? Building a structured process for AI use case selection and validation, like the one proposed in this post, helps you avoid costly ‘detours.’

Start your AI adoption journey with Edvantis. Our AI team can help you shape your business case and validate feasibility during discovery and then support you with end-to-end model development and deployment. Contact us to learn more about our approach to AI development.